Proxmox – A free, open source hypervisor

A few posts ago I talked about how I store the majority of my data in my cluster on Unraid, a software-based RAID operating system that also has a great plugin store and basic hypervisor support. (A hypervisor is a system that controls, monitors, and maintains virtual machines.)

What is the limitation of unraid, though? Unraid is a great system to get you started but it only runs on one machine. You can grow your unraid server, it actually is very easy to migrate your installation to bigger hardware if you need it, but what if it's still not enough power? What if you need to host so many applications that one machine is starting to feel the strain?

Then it might be time to start looking into hypervisors that don't run on your data cluster, but instead connect to it. This was my main problem: I was running a single hypervisor and was running out of processing capacity for it. Worse, because the hypervisor ran on my data storage, heavy loads on it also slowed my data access, compounding the issue. When my virtual machines could barely perform any tasks, I knew I had put it off for too long. It was time to actually plan an expansion.

Choosing a Hypervisor

I searched around for a lot of hypervisors. I wasn't opposed to proprietary, but I knew going in this was something that I would be using for years, so an annual or god forbid monthly subscription was out.

On top of not wanting to pay, I wanted this to be an opportunity to start really learning some new things. A lot of hypervisors come with amazing UIs that let you not really know what's happening underneath. This is honestly a great path for those who “just want things to work”, but I really did tell myself that this was the time I was going to learn how these things work, and what feels like an overwhelming UI wasn't going to stop me.

Finally, while Unraid runs on Linux, I knew that I would probably finally be forced to confront Linux head on. I would be looking at many types of hypervisors, but I didn't want my uneasiness with Linux to hold me back (and I am very glad I set that expectation, now that everything I run is Linux).

So my options were

VMware vSphere

vSphere had really confusing licensing terms that were very clearly made for businesses. Their licensing was on a per-core basis, which was very alarming to me. A free VMware option was available for personal workstations, which I could probably use on a few computers, but I really wanted a single interface. Plus, I've done the whole “I'll just use the license technically and install 4 different personal use licenses” thing, where you're in a grey area because hey, it is for personal use, right? Take it from me, it always backfires. Either they figure it out and revoke your license, or the license changes and then you're frantically trying to change your entire system over. So, vSphere was a non-starter.

Microsoft Hyper-V

Hyper-V is Microsoft's hypervisor, and it is built into a surprising number of Windows versions already, so I had already played with it a few times. While it's on most versions of Windows 10/11 now (enabled by turning on the Hyper-V feature), I was specifically looking at Windows Server 2016 Datacenter, mostly because I already had a license for it, which checked the box of no ongoing payments.

Hyper-V is very manual. It does have the standard Windows interface for adding and managing your VMs, but I found no way to manage them from another computer without using remote desktop. This wasn't a dealbreaker, but it was annoying to me. (There probably is some way to do this in a completely Windows ecosystem that I'm not aware of, but I really do like how web-based interfaces have taken off.)

The real dealbreaker was that the license has a clause that I could only use 16 cores on my system. I'm sure this was made earlier on where 16 seemed like a ton of cores and would cover most business needs, but I was looking at buying an AMD Threadripper, a beast of a CPU with a whopping 48 cores – so right there my license for Hyper-V was useless, and my search continued.

Proxmox

So finally I landed at Proxmox. Proxmox is an open source hypervisor that is built on top of Debian Linux. Since it's open source, the community version is the default. There are no noticeable differences, except that buying an enterprise subscription gets you more thoroughly tested, stable releases and some support paths.

Proxmox works as a standalone hypervisor using only one node, but what really got my attention was how it supports clusters out of the box. So as my server cluster grows, all I need to do is put the new machines on Proxmox and they'll work together. It also offers a decent UI to manage all of your Proxmox nodes from one single pane, which, if you remember, was a nice-to-have for me. Seeing the health of my entire system without needing to open up a dozen dashboards can really save time.

If you're dipping your toes into a hypervisor, I always, always recommend taking it for a test drive first. Do not simply dive in headfirst and start spinning up VMs you depend on. Try it out and see how it works, and more importantly what you do when it inevitably fails. So for today, let's just spin up a simple Virtual Machine to play with.

Setting up Storage

Before we can begin, we need to set the stage with storage in Proxmox. Storage comes in a few different types that you can read more fully about here. You can either access your storage settings by clicking on “Datacenter” and selecting “Storage”, or, if you prefer a terminal, edit the file /etc/pve/storage.cfg.

Essentially when setting up “storage” in proxmox you are setting up different locations on your disk, other disks, or network shares. On each of these storages, you will say what type of items will be stored there, things like VM/container disks, images (like ISOs), templates for VMs, and a few others.

Local Storage

Proxmox comes out of the box with two storages pre-configured: local and local-lvm. They are similar except for one key concept: local-lvm is thinly provisioned, meaning disks created on local-lvm only take up the space that has actually been written to them. local is not thinly provisioned, meaning if you reserve 20GB of drive space, it will take up 20GB. On local-lvm, its size would be zero until you start writing to it.

So, when I'm creating new drives for my virtual machines like we will below, I like to use thin provisioning. There is a very small tradeoff with writing speed, but it's negligible. The real risk is that you can over-provision your drive space, promising your VMs more disk than physically exists, which becomes a problem once they actually fill it. For this guide just take my advice and do not create disks whose combined size is more than your drive can hold. There will be future guides on how to... undo the damage if you do overprovision your drive and run out of space.
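To make the over-provisioning risk concrete, here is a minimal sketch of the arithmetic. The pool size and disk sizes are made-up numbers, and overcommit_ratio is a hypothetical helper of my own, not anything Proxmox ships.

```python
def overcommit_ratio(pool_gib, disk_sizes_gib):
    """Return total provisioned disk size divided by physical pool size."""
    if pool_gib <= 0:
        raise ValueError("pool size must be positive")
    return sum(disk_sizes_gib) / pool_gib


# Hypothetical numbers: a 100 GiB thin pool holding three virtual disks.
pool_gib = 100
disks_gib = [20, 32, 64]

ratio = overcommit_ratio(pool_gib, disks_gib)
print(f"provisioned {sum(disks_gib)} GiB on a {pool_gib} GiB pool (ratio {ratio:.2f})")
if ratio > 1:
    # Thin provisioning allows this, but the pool can run out of space
    # before any guest believes its own disk is full.
    print("over-provisioned")
```

Anything at or below a ratio of 1 means every disk could fill up completely and the pool would still cope.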

Network Storage

Now, if you're like me, you already have some big data array with all of your data somewhere else, like on Unraid. In Proxmox, hooking into this data can be achieved in multiple ways. Out of the box, it's simplest to export an NFS or SMB share from your data store and add it as a storage in Proxmox. I like to create a separate area within my datastore and set it up as a storage option in Proxmox. I'm not going to go into all of the details on this yet, but check out the Proxmox wiki on setting up NFS or SMB/CIFS shares.
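For a feel of what a network storage entry in /etc/pve/storage.cfg looks like, here is a rough sketch that renders an NFS stanza in the layout shown in the Proxmox storage documentation. The storage name, server address, and export path are all placeholders I made up, and nfs_stanza is a hypothetical helper. In practice I'd add the storage through the UI rather than hand-editing the file.

```python
def nfs_stanza(storage_id, server, export, content):
    """Render one NFS entry in the style of /etc/pve/storage.cfg."""
    lines = [
        f"nfs: {storage_id}",
        # Proxmox mounts network storages under /mnt/pve/<storage id>.
        f"\tpath /mnt/pve/{storage_id}",
        f"\tserver {server}",
        f"\texport {export}",
        # Content types are comma separated, e.g. iso, images, backup.
        f"\tcontent {','.join(content)}",
    ]
    return "\n".join(lines) + "\n"


# Placeholder values: swap in your own NAS address and share path.
print(nfs_stanza("unraid-isos", "192.168.1.50", "/mnt/user/proxmox", ["iso"]))
```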

This is how Proxmox will know where to find your ISO files to install your operating systems. You'll need to place the ISO files into a storage that you've configured with the “ISO image” content type. Once you've set one of your storages up for that, if you look at the disk/share there will be a template directory created with a folder named iso inside it. Copy an ISO file into that directory and Proxmox will be able to use it to install an operating system onto your new drive.
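As a quick sketch of where an ISO ends up, the helper below builds both the on-disk path and the volume name Proxmox uses for it, following the documented template/iso layout for directory-style storages. iso_location is a hypothetical helper and the ISO filename is made up. /var/lib/vz is the default path of the local storage.

```python
from pathlib import PurePosixPath


def iso_location(storage_id, storage_path, iso_name):
    """Return (file path on disk, Proxmox volume ID) for an ISO file."""
    file_path = PurePosixPath(storage_path) / "template" / "iso" / iso_name
    volume_id = f"{storage_id}:iso/{iso_name}"
    return str(file_path), volume_id


# The built-in "local" storage lives at /var/lib/vz by default.
path, volid = iso_location("local", "/var/lib/vz", "winxp.iso")
print(path)
print(volid)
```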

Creating a Virtual Machine

Today let's focus on the simplest task in Proxmox, setting up a simple VM. As a classic, I'm going to install Windows XP for pure nostalgia, but you can install any OS you like. (The caveat is that Windows 11 requires a bit more setup because of its TPM requirement, but you can still make it work.) So let's get started installing a virtual machine.

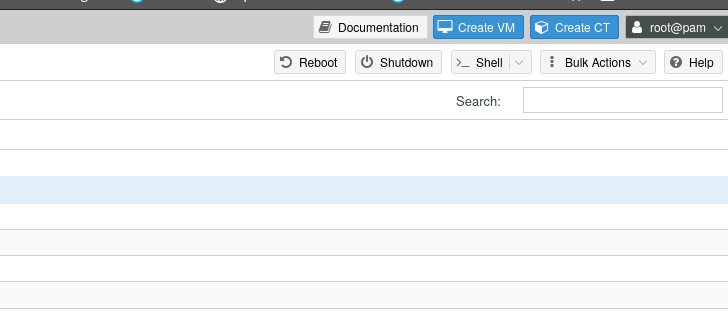

Start by clicking “Create VM”

This will bring up the Create VM wizard.

The first screen is fairly straightforward. Node will be autofilled (I blocked mine out for security), ID should be autogenerated, and Name will be up to you to decide. I recommend coming up with a naming convention, deciding on upper case vs lower case, etc.

The second screen is where we set up what OS options we want to run. For this one I'm selecting that I want to use an ISO file. I select the storage (set up in the previous step here) where I keep my ISOs. I then select the ISO file from the list. Under Guest options I select the values that match the type of operating system I am installing.

Third is “System”, where we can select different types of BIOS and virtual machine types. For now, we're going to leave all of this default – but you may find yourself back in here if you decide to get into things like hardware/GPU passthrough (another topic for another day).

Fourth is “Disks”. Here you will create a virtual disk to use with your new VM. Everything is pretty straightforward. First is the Bus/Device you want to mimic (it defaulted to IDE for me, but you can set SATA or SCSI; remember this has nothing to do with your physical connection). Then the storage: remember from above, this is the storage that you set up and where you'll want the disk to live. Then the size. Disks can be expanded later, but the process is a bit arduous. If you're concerned about the space, it's best to just give it the space here and now.

Next up is “CPU”, where you simply say how many sockets/cores the virtual CPU will have. Personally I've had bad luck setting anything but 1 socket. Cores can be whatever your machine can handle. For this small test one I'll be setting simply 2 cores.

Memory is pretty straightforward, how much RAM do you want to allocate to your machine. Personal experience, you can go over your machine's physical RAM, but the paging will cause massive slowdowns. Just allocate whatever you can safely.

The last configuration page, you will need to set up what networking you want. I won't go into the depths of linux networking, but essentially Proxmox has already set up a bridge for you, usually called vmbr0 or something. This will be connected to your main network. If you use VLANs, you will supply it with a VLAN tag there, but most of you will simply leave it empty. You can finally select the model of the virtual card, this will be helpful if your OS has drivers pre-installed for that model of network card.

Click Next and you'll be greeted with a confirmation page for all of your settings. Once you're happy with them, click “Finish” and your VM will be provisioned!
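If you'd rather see the wizard's choices in one place, the sketch below assembles a roughly equivalent qm create command (qm is Proxmox's command line tool for VMs) and prints it instead of running it. The VM ID, name, ISO filename, disk size, and memory are placeholder values I picked for this example, and qm_create_command is a hypothetical helper. Treat it as an approximation of what the wizard does, not an exact replay.

```python
import shlex


def qm_create_command(vmid, name, iso_volume, disk_storage, disk_gib,
                      cores=2, memory_mib=1024, bridge="vmbr0",
                      vlan_tag=None, nic_model="rtl8139", ostype="wxp"):
    """Build (but do not run) a qm create command as a list of arguments."""
    net0 = f"{nic_model},bridge={bridge}"
    if vlan_tag is not None:
        # Only needed if your network uses VLANs.
        net0 += f",tag={vlan_tag}"
    return [
        "qm", "create", str(vmid),
        "--name", name,
        "--ostype", ostype,                          # wxp = Windows XP
        "--ide2", f"{iso_volume},media=cdrom",       # install ISO as a CD drive
        "--ide0", f"{disk_storage}:{disk_gib}",      # new disk, size in GiB
        "--sockets", "1",
        "--cores", str(cores),
        "--memory", str(memory_mib),                 # in MiB
        "--net0", net0,
    ]


cmd = qm_create_command(100, "winxp-test", "local:iso/winxp.iso", "local-lvm", 20)
print(shlex.join(cmd))
```

Printing the command first is deliberate: you can read it over, compare it to the confirmation page, and only paste it into a shell on the node once it looks right.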

You'll be able to see your new VM on the left side, under your node now. Everything will be empty. When ready click “Start” in the top right, and then you can view your VM by clicking “Console”. Note you may have to be quick if you need to boot to the “CD”.

That's it! That's a virtual machine running on your own hardware using Proxmox. I'll dive in deeper next time to show how I run many virtual machines, but this is, at its core, how I run most of my services. Try out a few and get a feel for how it works.

Coming up next...

Next time we'll touch on more features – backing up, restoring, and more advanced setups. Way later we'll get into very advanced topics allowing your VMs to access hardware like graphics cards which opens up a lot of new doors and exciting items. (AI/LLMs... other interesting project ideas? This here is creating that foundation!)

Proxmox also supports LXCs, or Linux Containers. Another branch of the container space that I'll need to take on some time to fully dive into here.

Proxmox has been the foundational layer of my entire homelab for 5 years now and has served me well. There is a learning curve, but it's an achievable one. If you're starting to feel like you need to host more services than one machine can handle, then it might be time to start looking at solutions like Proxmox to handle the workloads you're asking of it.

Have fun out there, and happy tinkering!
